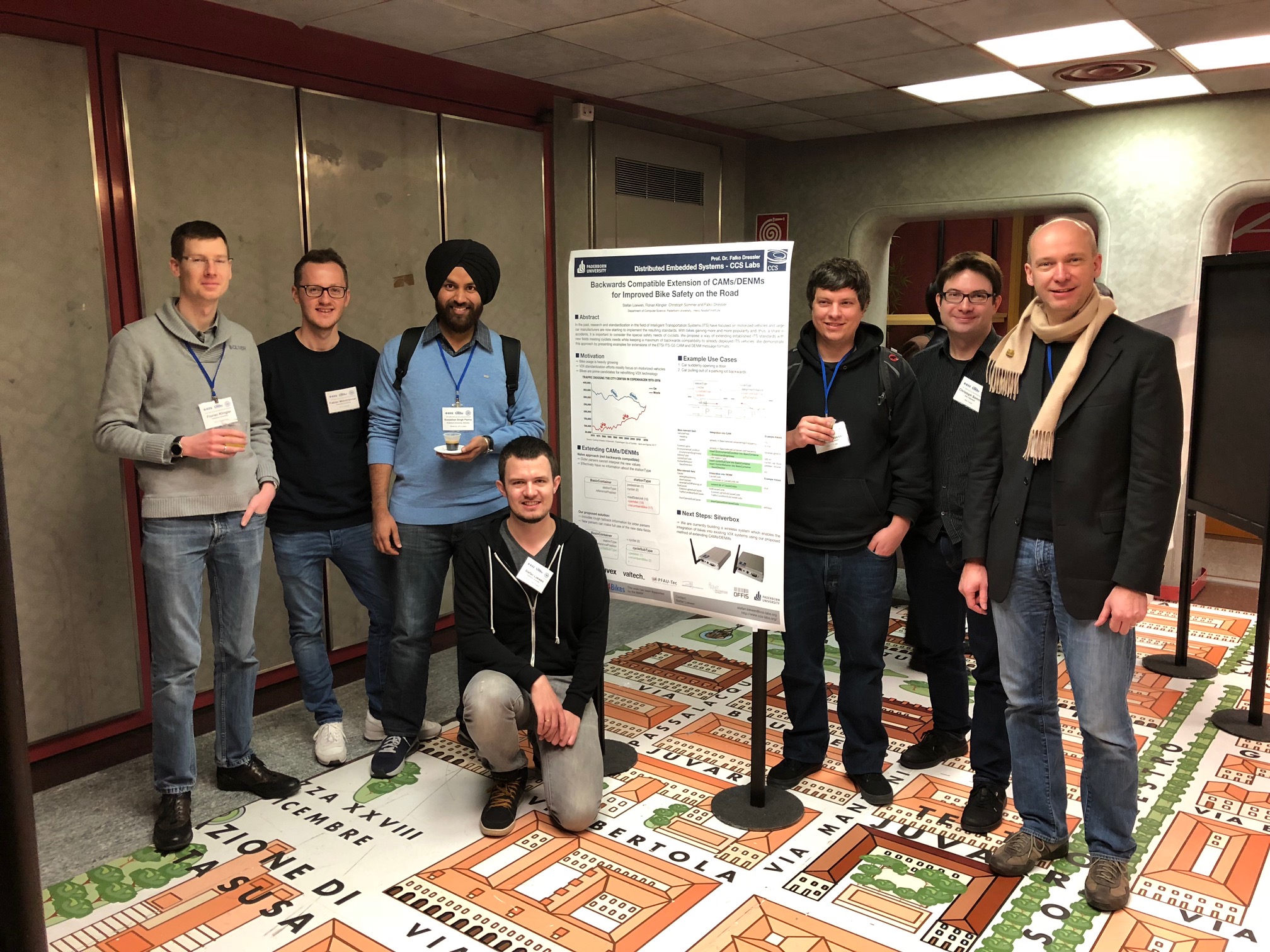

I just came back from the IEEE Vehicular Networking Conference, where I presented a paper that compares the impact of noise and interference on IEEE 802.11p.

Bastian Bloessl, Florian Klingler, Fabian Missbrenner and Christoph Sommer, “A Systematic Study on the Impact of Noise and OFDM Interference on IEEE 802.11p,” Proceedings of 9th IEEE Vehicular Networking Conference (VNC 2017), Torino, Italy, November 2017, pp. 287-290.

[DOI, BibTeX, PDF and Details…]

It was great to catch up with so many colleagues from Paderborn.

This year’s conference was all about C-V2X vs. IEEE 802.11p.

It is really interesting to see how 3GPP/industry tries to push C-V2X.

I think they are worried that the first wave of vehicular networks will use IEEE 802.11p, which could considerably delay the introduction of the cellular variant.

Today, I gave a guest lecture at the University of Paderborn, talking about the applications of SDR for wireless research.

I just noticed that researchers from the University of Minnesota used my WiFi and ZigBee transceivers to prototype their novel approach to physical-layer cross-technology communication.

Their paper won the best paper award at ACM MobiCom 2017.

Really cool!

Zhijun Li and Tian He, “WEBee: Physical-Layer Cross-Technology Communication via Emulation,” Proceedings of 23rd ACM International Conference on Mobile Computing and Networking (MobiCom 2017), Snowbird, UT, October 2017, pp. 2-14.

[DOI, BibTeX, PDF and Details…]

Yay! My paper A Systematic Study on the Impact of Noise and OFDM Interference on IEEE 802.11p was accepted for the IEEE Vehicular Networking Conference 2017.

The paper is about an experimental study that compares the impact of noise and interference on IEEE 802.11p.

Whether there is a significant difference between noise and interference is very relevant for network simulations, which often use very simplistic models of the physical layer.

If you are interested, the paper is available here, the code for the GNU Radio simulations is available on GitHub, and the modifications of the WiFi driver will soon be available on the project website.

It’s also the first paper of my bachelor student Fabian Missbrenner.

I hope there’s more to come from him :-)

Bastian Bloessl, Florian Klingler, Fabian Missbrenner and Christoph Sommer, “A Systematic Study on the Impact of Noise and OFDM Interference on IEEE 802.11p,” Proceedings of 9th IEEE Vehicular Networking Conference (VNC 2017), Torino, Italy, November 2017, pp. 287-290.

[DOI, BibTeX, PDF and Details…]

On 29 September 2017, I will participate in Probe: Research uncovered at Trinity College Dublin.

I will be around at Café Curie where Marie Curie Fellows have the chance to chat about their work and give short talks.

Hope to see you there.

I’m very happy that my paper summarizing the implementation and validation of my IEEE 802.11a/g/p transceiver got accepted for IEEE Transactions on Mobile Computing.

When I was trying to make the WiFi spectrum visible and audible in an earlier post, I was not really happy with the audio part.

Back then, I was playing some sines in ProcessingJS and did a simple ADSR (Attack-Decay-Sustain-Release) cycle per frame.
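For readers who have not come across ADSR before, here is a minimal sketch of the idea in Python. It is not the original ProcessingJS code; the function names, the linear ramps, and the choice to give segment lengths in samples are all assumptions made for illustration.

```python
import math


def adsr_envelope(n_samples, attack, decay, sustain_level, release):
    """Return n_samples gain values in [0, 1] (illustrative helper).

    attack, decay and release are segment lengths in samples;
    whatever remains of n_samples is spent at sustain_level.
    """
    sustain = n_samples - attack - decay - release
    if sustain < 0:
        raise ValueError("attack + decay + release exceed n_samples")
    env = []
    # Attack: ramp linearly from 0 up towards 1.
    env += [i / attack for i in range(attack)]
    # Decay: fall linearly from 1 towards the sustain level.
    env += [1.0 - (1.0 - sustain_level) * i / decay for i in range(decay)]
    # Sustain: hold the level.
    env += [sustain_level] * sustain
    # Release: fade linearly from the sustain level towards 0.
    env += [sustain_level * (1.0 - i / release) for i in range(release)]
    return env


def enveloped_sine(freq_hz, sample_rate, n_samples, **adsr):
    """Shape one 'frame' of a sine tone with the ADSR envelope."""
    env = adsr_envelope(n_samples, **adsr)
    return [
        gain * math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i, gain in enumerate(env)
    ]
```

Calling `enveloped_sine(440.0, 48000, 4800, attack=480, decay=960, sustain_level=0.5, release=1440)` would give one 100 ms frame of a 440 Hz tone that fades in and out instead of clicking on and off.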

Yesterday, my friend asked me why I didn’t simply connect it to Garageband.

Good question… so I changed the implementation to send MIDI notes to Garageband, which can play them with various synths.

And really, this is light years better.
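In case you wonder what "sending MIDI notes" boils down to: a note is just a three-byte message. The sketch below builds the raw note-on and note-off messages in Python. The byte layout follows the MIDI standard, but `channel_to_note` is a purely hypothetical mapping invented for this example; it is not how my implementation assigns notes, and actually delivering the bytes to a synth needs a MIDI library or virtual port that is left out here.

```python
def note_on(note, velocity=100, channel=0):
    """Build a raw MIDI note-on message (status byte 0x9n)."""
    if not (0 <= note <= 127 and 0 <= velocity <= 127 and 0 <= channel <= 15):
        raise ValueError("note/velocity must be 0..127, channel 0..15")
    return bytes([0x90 | channel, note, velocity])


def note_off(note, channel=0):
    """Build a raw MIDI note-off message (status byte 0x8n)."""
    if not (0 <= note <= 127 and 0 <= channel <= 15):
        raise ValueError("note must be 0..127, channel 0..15")
    return bytes([0x80 | channel, note, 0])


def channel_to_note(wifi_channel, base_note=60):
    """Hypothetical mapping: WiFi channel 1 is middle C (note 60),
    and every further channel is one semitone higher."""
    return base_note + (wifi_channel - 1)
```

With this made-up mapping, a frame seen on WiFi channel 6 would turn into `note_on(channel_to_note(6))`, followed by the matching `note_off` a bit later.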

What would you do if you were stranded on a lonely island?

I guess, learn how to prepare staple food – at least that’s what I did.

But seriously, in Ireland I could only find toast, no real bread.

After I listened to the German CRE podcast episode about bread, I gave it a try.

Still pretty bad, but already better than toast :-)

The breads are made with sourdough and without added yeast.

So only water, flour, and salt. Nothing else.

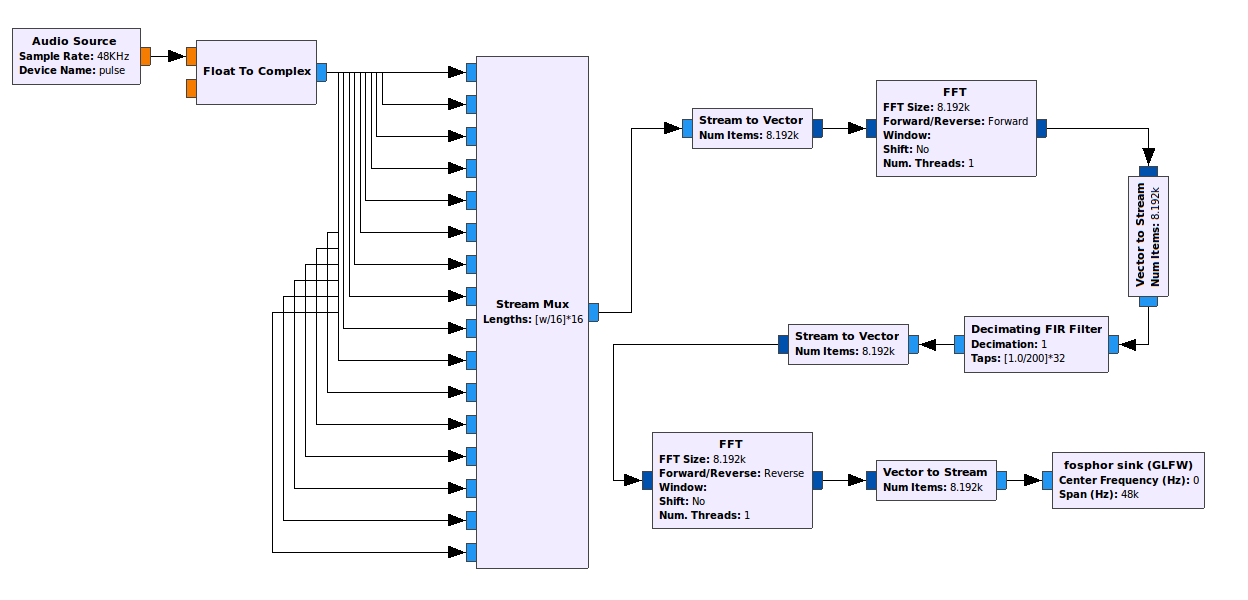

I was playing around a bit with gr-fosphor for audio.

To make it look nice, I tried to increase the number of samples per second without changing the spectral shape.

(And no, that’s not just upsampling.)

That’s probably a stupid approach, but it worked somewhat.

Marcus Mueller and I just gave a GNU Radio workshop at the Software Defined Radio Academy, held in conjunction with the HAMRADIO exhibition.

We were not sure how many people would show up since we had only 11 registrations, but, in the end, the room was completely full with about 80 people.

Great to see so much interest in SDR and GNU Radio also in the amateur radio community.

About 35 people had a laptop with them and were following us, creating an FM receiver that we successively extended for voice and, finally, APRS.
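The heart of such an FM receiver is a single step: the phase difference between consecutive complex baseband samples is proportional to the instantaneous frequency, which is what carries the audio. The sketch below shows that step in plain Python. It is not taken from the workshop material; the function name and the test tone are assumptions for illustration only.

```python
import cmath
import math


def quadrature_demod(iq, gain=1.0):
    """FM-demodulate complex baseband samples.

    For each pair of consecutive samples, take the angle of
    cur * conj(prev), i.e. the phase advance per sample.
    The output is one sample shorter than the input.
    """
    return [
        gain * cmath.phase(cur * prev.conjugate())
        for prev, cur in zip(iq, iq[1:])
    ]


# A carrier that sits 1 kHz off center at a 48 kHz sample rate
# advances its phase by 2*pi*1000/48000 radians per sample.
sample_rate = 48000
offset_hz = 1000
tone = [
    cmath.exp(2j * math.pi * offset_hz * n / sample_rate)
    for n in range(100)
]
demodulated = quadrature_demod(tone)
```

For the constant-offset tone, every output value is the same phase step; with real FM audio, that value follows the modulating signal, and a filter plus resampler would turn it into something a sound card can play.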

While we deliberately excluded installation from the workshop, we heard that quite a few people had issues with it.

That was not a problem for the workshop since Marcus prepared a live image that could be booted from a USB stick, but maybe we should consider holding a GNU Radio install party next year.

In case you are interested, the material for the workshop is on GitHub.

I hope people had some fun at the workshop and will have a closer look at GNU Radio.
